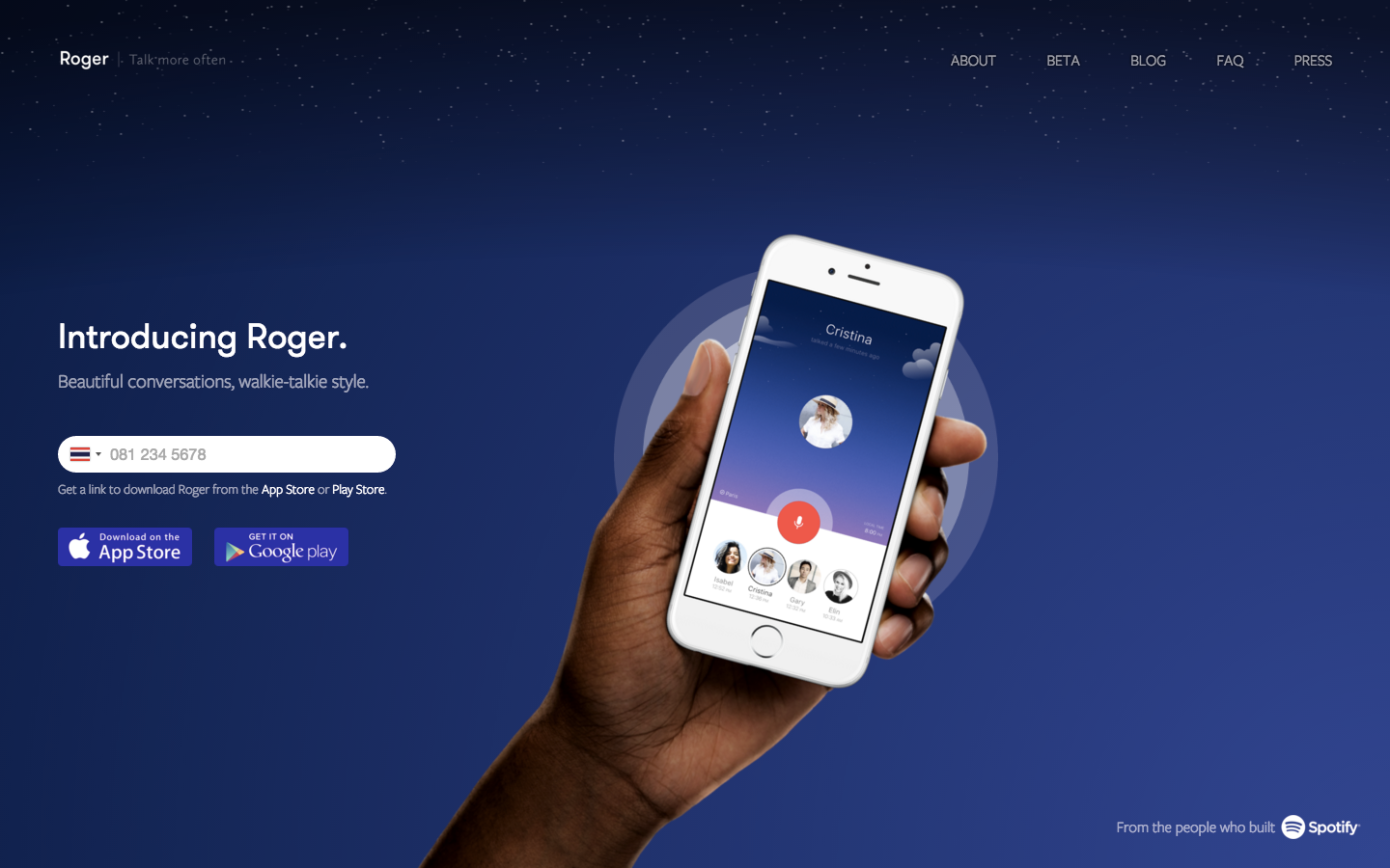

Roger was an asynchronous voice communication app that I helped build in 2015-2016. I joined the startup as an early-stage employee, the fifth member of the team. My main role was owning everything Android-related, including development, architecture, design guidance, R&D and publishing.

In Roger I saw the opportunity to help take a product from idea to MVP and beyond: the promise of a profound impact and a high bar to meet, while working alongside people with backgrounds at companies such as Spotify, Microsoft and Adobe.

My Work

Roger's premise was simple: reimagine everyday communication as a voice-centric experience. We explored this idea from many different angles, asking what it means on a human level and how we could expand on this business proposition and put it to the test. There was no shortage of ideas and experiences that this apparent restriction made possible. Ephemerality became a defining feature of many apps after Snapchat proved the concept and changed the tech landscape. In the same way, at Roger we were exploring a fundamentally new way to imagine conversations on mobile devices by making voice communication the defining feature rather than an add-on.

On this foundation, we constantly flexed our curiosity and explored new ideas and concepts. Below are some of the ideas I spearheaded that have stayed with me, along with other interesting developments that were part of this project.

The human touch

A key question behind much of what we did was how to harness the magic of in-person communication and bring it into a mobile application, making communication feel more human and more natural.

We tried to capture the moment in the app in many ways. The background changed dynamically from day to night and reflected the weather at the location of the person you were talking with. The premise was that the weather is one of the first things people talk about in casual conversation. To support this, we added beautifully crafted animations for rain, snow, a clear sky, a bright sunny day and so on. This made the app feel more dynamic and alive. I also made several of the initial launch animations for our iOS app using Apple's SpriteKit. (To this day I miss having a good counterpart to that framework on Android.)
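As a rough illustration of the day/night part of this idea, the Java sketch below picks a background scene from the other person's local time and a weather condition. The `ConversationBackground` class, its enums, the fixed 07:00 to 19:00 daytime window and the scene-key format are all hypothetical. The sketch assumes the contact's time zone and current weather are already known. It does not reflect how Roger actually fetched or rendered them.

```java
import java.time.Instant;
import java.time.LocalTime;
import java.time.ZoneId;
import java.util.Locale;

// Hypothetical sketch: choose a background scene from the contact's local
// time and a weather condition. Enum names, the fixed daytime window and the
// scene-key format are illustrative assumptions.
final class ConversationBackground {

    enum Weather { CLEAR, RAIN, SNOW }

    enum TimeOfDay { DAY, NIGHT }

    // Assumed daytime window [07:00, 19:00); real sunrise/sunset data would be more accurate.
    private static final LocalTime DAY_START = LocalTime.of(7, 0);
    private static final LocalTime DAY_END = LocalTime.of(19, 0);

    private ConversationBackground() {}

    /** Returns DAY or NIGHT for the given instant, evaluated in the contact's time zone. */
    static TimeOfDay timeOfDay(Instant now, ZoneId contactZone) {
        LocalTime local = now.atZone(contactZone).toLocalTime();
        boolean isDay = !local.isBefore(DAY_START) && local.isBefore(DAY_END);
        return isDay ? TimeOfDay.DAY : TimeOfDay.NIGHT;
    }

    /** Builds a key such as "night_rain" that a renderer could map to an animation. */
    static String sceneKey(Instant now, ZoneId contactZone, Weather weather) {
        return timeOfDay(now, contactZone).name().toLowerCase(Locale.ROOT)
                + "_" + weather.name().toLowerCase(Locale.ROOT);
    }
}
```

Consider a contact in `Asia/Tokyo` at 12:00 UTC on 1 March 2016. It is 21:00 there, so with rain the scene maps to `night_rain`. A production version would more likely use sunrise and sunset times for the contact's location than a fixed window.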

Audio engineering

The application wasn't just beautiful visuals. Even so, powering a consistent UX with fluid animations while staying mindful of resource usage is an interesting challenge in itself, and it requires good engineering practices.

The audio recording component was built entirely in-house using Android's low-level audio capture APIs. This let us encode the audio on the fly and shorten the delay between pressing the button and actually starting to record. Other applications typically either had this delay or worked around it with a less ideal approach. They kept the microphone constantly listening and then clipped the audio from the moment the button was pressed, a practice that drains the battery considerably.

I spent many hours fine-tuning and experimenting with different audio encoding settings. The goal was the best balance between great audio quality and a payload small enough for fast over-the-air uploads. Roger had pristine audio quality. Given the asynchronous nature of the communication, we could invest more than others on this front and bring out the beautiful nuances of a person's voice with real clarity. The difference was, in the truest sense, audible. This was not the typical robotic-sounding or muffled voice of other instant communication solutions, and users frequently commented on it. In fact, this quality is still missing from most of today's apps, although things have improved a lot since then.
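The quality/payload trade-off described above is mostly arithmetic. A message's size is its bitrate times its duration, and its upload time is that size divided by the uplink speed. The Java sketch below works through this for uncompressed PCM versus a compressed stream. The inputs are illustrative assumptions, not the settings Roger used: a 44.1 kHz mono 16-bit source, a 64 kbps encoder target, a 30-second message and a 1 Mbps uplink. The `PayloadEstimate` class is hypothetical.

```java
// Back-of-the-envelope model of the audio quality vs. payload trade-off.
// All concrete numbers used in main() are illustrative assumptions.
final class PayloadEstimate {

    private PayloadEstimate() {}

    /** Bitrate of uncompressed PCM audio, in bits per second. */
    static long pcmBitrate(int sampleRateHz, int bitsPerSample, int channels) {
        return (long) sampleRateHz * bitsPerSample * channels;
    }

    /** Size in bytes of a message of the given duration at a constant bitrate. */
    static long payloadBytes(long bitsPerSecond, int durationSeconds) {
        return bitsPerSecond * durationSeconds / 8;
    }

    /** Seconds needed to upload a payload over a link of the given speed. */
    static double uploadSeconds(long payloadBytes, long uplinkBitsPerSecond) {
        return payloadBytes * 8.0 / uplinkBitsPerSecond;
    }

    public static void main(String[] args) {
        int durationSeconds = 30;    // assumed message length
        long uplinkBps = 1_000_000L; // assumed 1 Mbps mobile uplink
        long rawBytes = payloadBytes(pcmBitrate(44_100, 16, 1), durationSeconds);
        long encodedBytes = payloadBytes(64_000L, durationSeconds); // assumed 64 kbps encoder target
        System.out.printf("raw PCM: %d bytes, %.1f s upload%n", rawBytes, uploadSeconds(rawBytes, uplinkBps));
        System.out.printf("64 kbps: %d bytes, %.1f s upload%n", encodedBytes, uploadSeconds(encodedBytes, uplinkBps));
    }
}
```

Under these assumptions, raw PCM comes to 2,646,000 bytes and about 21.2 s of upload. At 64 kbps the same message is 240,000 bytes and takes about 1.9 s. This illustrates why tuning the encoder settings pays off directly in upload time.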

I also explored a lesser-known capability of mobile devices: binaural recording. Most smartphones have at least two microphones. These keep voice calls intelligible regardless of how you hold the device, and they feed noise-cancellation processing, which is frequently done at the hardware or OS level and is transparent to apps. With Android's low-level APIs you can address these microphones with much finer control. You can then record stereo audio in which each track comes from a different source, the essence of binaural recording. On a phone, however, the microphones are not well positioned for binaural recording. Another type of device also has several microphones, and ideally positioned ones: active noise-cancelling headphones! These have at least one microphone on each side, a much better placement for the sources of a binaural recording. I built this feature and shipped it in the application as a proof of concept. Back in 2016, active noise-cancelling headphones were not as commonplace as they are today, so the feature only ever reached a small subset of users.
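An Android `AudioRecord` configured for 16-bit stereo capture delivers samples interleaved frame by frame: left, right, left, right, and so on. The minimal Java sketch below assumes that layout and separates such a buffer into the two tracks of a binaural recording. The `StereoSplitter` class is hypothetical. Which physical microphone ends up on which channel depends on the device and the connected headset.

```java
// Minimal sketch, assuming 16-bit stereo PCM interleaved as L, R, L, R, ...
// Channel-to-microphone mapping is device-dependent and not handled here.
final class StereoSplitter {

    private StereoSplitter() {}

    /**
     * Splits the first {@code sampleCount} interleaved samples into
     * {left, right} channel arrays, one sample per frame in each.
     */
    static short[][] deinterleave(short[] interleaved, int sampleCount) {
        if (sampleCount < 0 || sampleCount > interleaved.length) {
            throw new IllegalArgumentException("sampleCount out of range");
        }
        if (sampleCount % 2 != 0) {
            throw new IllegalArgumentException("stereo data must contain whole frames");
        }
        int frames = sampleCount / 2;
        short[] left = new short[frames];
        short[] right = new short[frames];
        for (int frame = 0; frame < frames; frame++) {
            left[frame] = interleaved[2 * frame];      // first sample of each frame
            right[frame] = interleaved[2 * frame + 1]; // second sample of each frame
        }
        return new short[][] {left, right};
    }
}
```

The `sampleCount` parameter mirrors the value returned by `AudioRecord.read(short[], int, int)`. That value is the number of shorts actually read and may be smaller than the buffer length.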

There were many other small but essential features that made audio a first-class citizen in the product. On the playback side, streaming was built on ExoPlayer, which I extended with in-house components for audio processing and continuous listening.
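The Java sketch below is a framework-independent illustration of the kind of per-buffer audio processing mentioned above. It applies a gain in decibels to 16-bit PCM samples and clamps the result, so loud passages saturate rather than wrap around. The `GainProcessor` class is hypothetical. It is neither ExoPlayer code nor Roger's actual processor, and using it in a player would require adapting it to that player's own processing interface.

```java
// Hypothetical, framework-independent per-buffer processing step.
final class GainProcessor {

    private final float linearGain;

    /** Converts gainDb to a linear amplitude factor, 10^(dB / 20). */
    GainProcessor(float gainDb) {
        this.linearGain = (float) Math.pow(10.0, gainDb / 20.0);
    }

    /** Scales the first {@code count} samples in place, clamping to the 16-bit range. */
    void process(short[] samples, int count) {
        for (int i = 0; i < count; i++) {
            int scaled = Math.round(samples[i] * linearGain);
            // Saturate instead of letting the int-to-short cast wrap around.
            samples[i] = (short) Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, scaled));
        }
    }
}
```

A gain of 20 dB multiplies amplitudes by 10. Clamping trades a little distortion on peaks for avoiding the much harsher artifacts of integer wrap-around.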

Accessibility

Our app's first MVP shipped with basic A11Y functionality from the get-go. This meant making sure we had labels for visual content so it could be navigated with screen-reader software. Also, as a general design principle for better usability, we didn't hide content in the UI behind menus. As the app grew in popularity, we realized that a growing cohort of visually impaired users were particularly passionate and vocal about its possibilities. In retrospect, the app was an obvious fit for them. By constraining communication to audio, we had inadvertently created an ideal platform for audio-based communication, one that required very few compromises. I decided to explore this further, to build on that momentum and grow our user base. I had the pleasure of working with a couple of visually impaired users from the app's community to better understand how we could make the product work better for them.

The result was very enlightening for me as an engineer exploring a different dimension of mobile app development, and the work was also very rewarding from a human perspective. I later had the pleasure of speaking on the subject at NYC's A11Y meetup. I also published a Medium article about some of my findings from this deep dive: Medium article on Roger's A11Y.